The Information Architecture Institute defines information architecture as the art and science of organizing and labeling websites, intranets, online communities and software to support findability and usability.

In practice, the term describes the structure of a system: the way information is grouped, and the navigation methods and terminology used within the system. An effective information architecture lets people step logically through a system, confident that they are getting closer to the information they require.

Information architecture is most commonly associated with websites, intranets and content management systems, but it can be applied to any information structure or computer system.

Information architecture involves categorizing information into a coherent structure, ideally one that the intended audience can understand quickly, if not immediately, and can use to retrieve the information they are searching for with little effort. The structure is usually hierarchical.
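A hierarchical structure like this can be pictured as a tree: each node is a labelled category, and the route from the top of the tree to an item is what a user steps through to find it. The minimal Python sketch below assumes that model; the `Node` class, the `find_path` helper and the sample labels are hypothetical illustrations, not part of any particular tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """One labelled category in a hypothetical site hierarchy."""
    label: str
    children: list[Node] = field(default_factory=list)


def find_path(node: Node, target: str) -> list[str] | None:
    """Return the labels a user would step through to reach `target`, or None."""
    if node.label == target:
        return [node.label]
    for child in node.children:
        sub_path = find_path(child, target)
        if sub_path is not None:
            return [node.label] + sub_path
    return None


# Hypothetical sample hierarchy, for illustration only.
site = Node("Home", [
    Node("Products", [Node("Laptops"), Node("Phones")]),
    Node("Support", [Node("Returns"), Node("Contact us")]),
])

print(find_path(site, "Returns"))  # ['Home', 'Support', 'Returns']
```

Each label in the returned path is a choice the user has to get right, which is why clear grouping and labelling at every level matter.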

Organizing functionality and content into a structure that people can navigate intuitively doesn’t happen by chance. Organizations must recognize the importance of information architecture, or they risk creating great content and functionality that no one can find. Most people notice information architecture only when it is poor and stops them from finding the information they require.

An effective information architecture comes from understanding three things: the business objectives and constraints, the content, and the requirements of the people who will use the site.

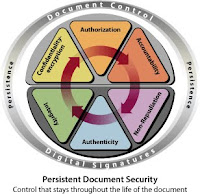

Information architecture is often described using the following diagram:

Business/Context

Understanding an organization's business objectives, politics, culture, technology, resources and constraints is essential before developing the information architecture.

Techniques for understanding context include:

- reading existing documentation: mission statements, organization charts, previous research and vision documents are a quick way to build an understanding of the context in which the system must work;

- stakeholder interviews: speaking to stakeholders provides valuable insight into the business context and can uncover previously unknown objectives and issues.

Content

The most effective method for understanding the quantity and quality of content (i.e. functionality and information) proposed for a system is to conduct a content inventory. A content inventory identifies all of the proposed content for a system, where each item currently resides, who owns it and any existing relationships between items. Content inventories are also commonly used to support migrating content from the old system to the new one.
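Content inventories are often kept as spreadsheets. The Python sketch below assumes one CSV row per content item, with columns for the fields mentioned above (current location, owner, related items); the column names, the semicolon-separated `related` format and the sample rows are all hypothetical. It shows two simple checks that help with migration: finding items with no owner, and grouping items by owner.

```python
import csv
import io
from collections import defaultdict

# Hypothetical inventory: one row per content item. "related" holds
# semicolon-separated IDs of other items this one is linked to.
INVENTORY_CSV = """id,title,location,owner,related
1,Returns policy,/help/returns.html,Customer Service,2
2,Contact form,/contact.php,Web Team,
3,2019 price list,/docs/prices-2019.pdf,,1;2
"""


def load_inventory(text):
    """Parse CSV text into a list of dicts, splitting the related-ID list."""
    rows = list(csv.DictReader(io.StringIO(text)))
    for row in rows:
        row["related"] = [r for r in row["related"].split(";") if r]
    return rows


def unowned(rows):
    """Titles of items with no recorded owner, to resolve before migration."""
    return [row["title"] for row in rows if not row["owner"]]


def by_owner(rows):
    """Group item titles by owner, e.g. to plan who migrates what."""
    groups = defaultdict(list)
    for row in rows:
        groups[row["owner"] or "(none)"].append(row["title"])
    return dict(groups)


items = load_inventory(INVENTORY_CSV)
print(unowned(items))  # ['2019 price list']
print(by_owner(items))
```

A real inventory usually has more columns (content type, last updated, keep/rewrite/delete decision); the same row-per-item shape extends naturally to them.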

Users

An effective information architecture must reflect the way people think about the subject matter. Techniques for getting users involved in the creation of an information architecture include card sorting and card-based classification evaluation.

Card sorting involves representative users sorting a series of cards, each labelled with a piece of content or functionality, into groups that make sense to them. Card sorting generates ideas for how information could be grouped and labelled.
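One common way to analyse card-sort results (not described in the original) is a pairwise similarity score: the fraction of participants who placed two cards in the same group. The Python sketch below assumes each participant's sort is recorded as a list of groups, each group a set of card labels; the cards and sorts are invented sample data, and the names the participants gave their groups are ignored.

```python
from collections import Counter
from itertools import combinations

# Hypothetical results: one list of groups per participant,
# each group being the set of card labels placed together.
sorts = [
    [{"Returns", "Delivery"}, {"Laptops", "Phones"}],
    [{"Returns", "Delivery", "Contact us"}, {"Laptops", "Phones"}],
    [{"Returns", "Contact us"}, {"Delivery", "Laptops", "Phones"}],
]


def similarity(sorts):
    """Fraction of participants who put each pair of cards in the same group.

    Pairs that no participant ever grouped together are omitted (score 0).
    """
    together = Counter()
    for sort in sorts:
        for group in sort:
            # sorted() gives each pair a canonical order, so (a, b) and (b, a) match
            for pair in combinations(sorted(group), 2):
                together[pair] += 1
    return {pair: count / len(sorts) for pair, count in together.items()}


scores = similarity(sorts)
for pair, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(pair, round(score, 2))
```

Pairs with high scores (here "Laptops" and "Phones", grouped together by every participant) suggest content that users expect to find in the same place.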

Card-based classification evaluation is a technique for testing an information architecture before it has been implemented.

It involves writing each level of the information architecture on a large card and developing a set of information-seeking tasks for people to perform using the architecture. Participants then work through the cards, level by level, to the point where they would expect to find the answer to each task.
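The results of such a session can be tallied per task. The Python sketch below assumes a scoring scheme borrowed from common tree-testing practice rather than from the original: an attempt succeeds if the participant finishes at the expected card, and is "direct" if they never went back a level. The architecture, the `BACK` marker, the task and the recorded trails are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical architecture: each key is a label on a card; its value holds
# the labels on the next-level card (an empty dict marks a final destination).
ARCHITECTURE = {
    "Products": {"Laptops": {}, "Phones": {}},
    "Support": {"Returns": {}, "Contact us": {}},
}
BACK = "<back>"  # hypothetical marker: participant returned to the previous card


@dataclass
class Attempt:
    task: str
    expected: tuple[str, ...]  # correct path through the cards
    trail: list[str]           # choices in the order the participant made them


def is_valid_path(path):
    """Check that a task's expected path actually exists in ARCHITECTURE."""
    level = ARCHITECTURE
    for label in path:
        if label not in level:
            return False
        level = level[label]
    return True


def final_position(trail):
    """Replay a participant's choices, treating BACK as moving up one level."""
    path = []
    for step in trail:
        if step == BACK:
            if path:
                path.pop()
        else:
            path.append(step)
    return tuple(path)


def score(attempts):
    """Return (success rate, direct-success rate) over all attempts."""
    successes = [a for a in attempts if final_position(a.trail) == a.expected]
    direct = [a for a in successes if BACK not in a.trail]
    return len(successes) / len(attempts), len(direct) / len(attempts)


task = "Find out how to return a laptop"
attempts = [
    Attempt(task, ("Support", "Returns"), ["Support", "Returns"]),
    Attempt(task, ("Support", "Returns"), ["Products", BACK, "Support", "Returns"]),
    Attempt(task, ("Support", "Returns"), ["Products", "Laptops"]),
]
assert all(is_valid_path(a.expected) for a in attempts)
print(score(attempts))  # (0.6666666666666666, 0.3333333333333333)
```

A low direct-success rate on a task, even with high overall success, hints that the top-level labels are sending people down the wrong path first.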

More about information architecture next time...